Update the documentation for the crawler and data analysis sections

parent

a259a6abd3

commit

b2d309c6c1

|

|

@ -1,102 +0,0 @@

|

|||

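## Database Fundamentals

### Normal Forms

Normal-form theory is the guiding principle for designing the two-dimensional tables of a relational database.

1. First normal form (1NF): the domain of every column consists of atomic values that cannot be split further.
2. Second normal form (2NF): all data in the table must be fully dependent on the table's keys (the primary key and candidate keys).
3. Third normal form (3NF): every non-key attribute depends only on the candidate keys, which means non-key attributes should be independent of one another.

> **Note**: In practice, for efficiency reasons, we may well denormalize when designing tables, that is, deliberately lower the normal-form level and add redundant data to obtain better performance.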

|

||||

|

||||

### Data Integrity

1. Entity integrity: every entity is unique
   - Primary key (`primary key`) / unique constraint (`unique`)
2. Referential integrity: a relation must not reference an entity that does not exist
   - Foreign key (`foreign key`)
3. Domain integrity: the data is valid
   - Data type and length
   - Not-null constraint (`not null`)
   - Default value constraint (`default`)
   - Check constraint (`check`)

   > **Note**: Before MySQL 8.0.16, check constraints were parsed but not enforced.
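The constraint types listed above can be tried out without a MySQL server. The following is a minimal sketch that uses Python's built-in `sqlite3` module instead of MySQL (an assumption made only so the example is self-contained); the tables `tb_dept` and `tb_emp` and the helper `try_insert` are hypothetical names invented for illustration.

```Python
import sqlite3

conn = sqlite3.connect(':memory:')
# SQLite only enforces foreign keys when this pragma is switched on
conn.execute('pragma foreign_keys = on')
conn.executescript("""
create table tb_dept (
    dept_id integer primary key,                            -- entity integrity
    dept_name text not null unique                          -- not null + unique
);
create table tb_emp (
    emp_id integer primary key,
    emp_name text not null,
    salary integer default 3000 check (salary > 0),         -- default + check
    dept_id integer not null references tb_dept (dept_id)   -- referential integrity
);
insert into tb_dept values (10, 'R&D');
""")


def try_insert(sql: str) -> str:
    """Run one insert and report whether a constraint rejected it."""
    try:
        with conn:
            conn.execute(sql)
        return 'ok'
    except sqlite3.IntegrityError as err:
        return f'rejected: {err}'


results = [
    try_insert("insert into tb_emp (emp_id, emp_name, dept_id) values (1, 'Alice', 10)"),
    # duplicate primary key
    try_insert("insert into tb_emp (emp_id, emp_name, dept_id) values (1, 'Bob', 10)"),
    # department 99 does not exist
    try_insert("insert into tb_emp (emp_id, emp_name, dept_id) values (2, 'Carol', 99)"),
    # check constraint fails
    try_insert("insert into tb_emp values (3, 'Dave', -1, 10)"),
    # emp_name is null
    try_insert("insert into tb_emp (emp_id, dept_id) values (4, 10)"),
]
for line in results:
    print(line)
default_salary = conn.execute('select salary from tb_emp where emp_id = 1').fetchone()[0]
```

Only the first insert is accepted; the other four are each rejected by a different constraint, and the accepted row picks up the default salary.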

|

||||

|

||||

### Data Consistency

1. Transaction: a series of read/write operations on the database that either all succeed or all fail.

2. ACID properties of a transaction
   - Atomicity: a transaction executes as a whole; the database operations it contains are either all executed or none are
   - Consistency: a transaction must take the database from one consistent state to another consistent state
   - Isolation: when multiple transactions run concurrently, the execution of one should not affect the execution of the others
   - Durability: changes made by a committed transaction must be stored permanently in the database

3. Transaction operations in MySQL

   - Start a transaction

     ```SQL
     start transaction
     ```

   - Commit a transaction

     ```SQL
     commit
     ```

   - Roll back a transaction

     ```SQL
     rollback
     ```

4. View the transaction isolation level

   ```SQL
   show variables like 'transaction_isolation';
   ```

   ```
   +-----------------------+-----------------+
   | Variable_name         | Value           |
   +-----------------------+-----------------+
   | transaction_isolation | REPEATABLE-READ |
   +-----------------------+-----------------+
   ```

   As the output shows, the default transaction isolation level in MySQL is `REPEATABLE-READ`.

5. Change the transaction isolation level (for the current session)

   ```SQL
   set session transaction isolation level read committed;
   ```

   Checking the isolation level again gives the following result.

   ```
   +-----------------------+----------------+
   | Variable_name         | Value          |
   +-----------------------+----------------+
   | transaction_isolation | READ-COMMITTED |
   +-----------------------+----------------+
   ```
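To make atomicity concrete, here is a small sketch of a transfer that either applies both updates or neither. It uses Python's built-in `sqlite3` module rather than MySQL (an assumption, chosen so the example runs anywhere); the table `tb_account` and the functions `transfer` and `balances` are hypothetical.

```Python
import sqlite3

conn = sqlite3.connect(':memory:')
conn.execute('create table tb_account (name text primary key, balance integer check (balance >= 0))')
conn.execute("insert into tb_account values ('A', 100), ('B', 50)")
conn.commit()


def transfer(src: str, dst: str, amount: int) -> bool:
    """Move money between accounts; both updates succeed or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute('update tb_account set balance = balance + ? where name = ?', (amount, dst))
            conn.execute('update tb_account set balance = balance - ? where name = ?', (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False


def balances() -> dict:
    """Return the current balance of every account."""
    return dict(conn.execute('select name, balance from tb_account order by name'))


ok = transfer('A', 'B', 30)
# This would make A negative, so the credit already given to B is undone too
failed = transfer('A', 'B', 500)
print(ok, failed, balances())
```

In the failing transfer the credit to B runs first and succeeds, yet it is rolled back when the debit from A violates the check constraint, which is the "all or nothing" behavior described above.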

|

||||

|

||||

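Transactions in relational databases are a big topic. When multiple concurrent transactions access the same data, three kinds of read problems (dirty reads, non-repeatable reads, phantom reads) and two kinds of update problems (type 1 lost updates, type 2 lost updates) can occur. To learn about these five problems, see question 80 of [《Java面试题全集(上)》](https://blog.csdn.net/jackfrued/article/details/44921941), which I published on CSDN. To avoid these problems, relational databases provide locking mechanisms underneath: by the object being locked, locks are either table-level or row-level; by the relationship between concurrent transactions, they are either shared or exclusive. Using locks directly is cumbersome, so the database offers automatic locking: once the user specifies a suitable transaction isolation level, the database analyzes the SQL statements and places appropriate locks on the resources the transaction accesses. The database also maintains these locks and uses various techniques to improve performance, all of which is transparent to the user. For the details of MySQL transactions and locks, I recommend the more advanced [《高性能MySQL》](https://item.jd.com/11220393.html) (*High Performance MySQL*), a classic book on databases.

The ANSI/ISO SQL-92 standard defines four transaction isolation levels, as shown in the table below. Note that isolation and concurrent data access pull in opposite directions: the higher the isolation level, the lower the concurrency. Which level to use has to be decided for the specific application; there is no universal rule.

<img src="https://gitee.com/jackfrued/mypic/raw/master/20211121225327.png" style="zoom:50%;">

### Summary

There is far more to SQL and MySQL than what is listed above: optimizing SQL itself, MySQL performance tuning, MySQL operations tooling, backing up and restoring MySQL data, monitoring MySQL services, deploying high-availability architectures, and so on. These topics cannot all be covered here, so they are left for when they are needed; readers are also welcome to explore them on their own.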

|

||||

|

|

@ -0,0 +1,213 @@

|

|||

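## Introduction to Hive

Hive is a Hadoop-based data warehouse tool open-sourced by Facebook and one of the most widely used big-data processing solutions. It translates SQL queries into MapReduce jobs (MapReduce is a software architecture proposed by Google for parallel computation over large data sets), offers good SQL support, and makes statistics over big data convenient.

<img src="https://gitee.com/jackfrued/mypic/raw/master/20220210080608.png">

> **Note**: See <https://www.edureka.co/blog/hadoop-ecosystem> for an overview of the Hadoop ecosystem.

Put briefly, the core of Hive comes down to two points:

1. It maps structured data in HDFS to tables.
2. It parses and converts Hive-SQL into a series of Hadoop-based MapReduce or Spark jobs, and the data is processed by running those jobs. In other words, you can process data without learning a programming language such as Java or Scala.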
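To give a feel for the second point, the sketch below mimics in plain Python how a query like `select goods_category, sum(pay_amount) ... group by goods_category` could be split into map, shuffle, and reduce phases. It is a conceptual illustration only, not how Hive actually compiles queries; the sample rows and the function names `map_phase`, `shuffle`, and `reduce_phase` are made up.

```Python
from collections import defaultdict

# Hypothetical rows of (user_name, goods_category, pay_amount)
rows = [
    ('alice', 'food', 30.0),
    ('bob', 'book', 45.5),
    ('alice', 'book', 20.0),
    ('carol', 'food', 12.5),
]


def map_phase(records):
    """Emit one (key, value) pair per row: the group-by column and the measure."""
    for _, category, amount in records:
        yield category, amount


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Aggregate each group; here the aggregate is sum()."""
    return {key: sum(values) for key, values in groups.items()}


totals = reduce_phase(shuffle(map_phase(rows)))
print(totals)
```

On a real cluster the map and reduce steps run in parallel on many machines; the point here is only that a `group by` naturally decomposes into "emit key/value pairs" and "aggregate per key".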

|

||||

|

||||

The table below compares Hive with a traditional relational database.

|                | Hive              | RDBMS             |
| -------------- | ----------------- | ----------------- |
| Query language | HQL               | SQL               |
| Data storage   | HDFS              | Local file system |
| Execution      | MapReduce / Spark | Executor          |
| Latency        | High              | Low               |
| Data scale     | Large             | Small             |

|

||||

|

||||

### Preparation

1. Set up the big-data platform shown in the figure below.

2. Access the big-data platform through the Client node.

3. Create a directory in the Hadoop file system.

```Shell
hadoop fs -mkdir /data
hadoop fs -chmod g+w /data
```

4. Copy the prepared data files into the Hadoop file system.

```Shell
hadoop fs -put /home/ubuntu/data/* /data
```

|

||||

|

||||

### Creating and Dropping Databases

Create.

```SQL
create database if not exists demo;
```

or

```Shell
hive -e "create database demo;"
```

Drop.

```SQL
drop database if exists demo;
```

Switch.

```SQL
use demo;
```

|

||||

|

||||

### Data Types

Hive's data types are listed below.

Primitive data types.

| Data type | Size   | Supported since |
| --------- | ------ | --------------- |
| tinyint   | 1-Byte |                 |
| smallint  | 2-Byte |                 |
| int       | 4-Byte |                 |
| bigint    | 8-Byte |                 |
| boolean   |        |                 |
| float     | 4-Byte |                 |
| double    | 8-Byte |                 |
| string    |        |                 |
| binary    |        | Version 0.8     |
| timestamp |        | Version 0.8     |
| decimal   |        | Version 0.11    |
| char      |        | Version 0.13    |
| varchar   |        | Version 0.12    |
| date      |        | Version 0.12    |

Complex data types.

| Data type | Description                              | Example                                       |
| --------- | ---------------------------------------- | --------------------------------------------- |
| struct    | Similar to a struct in C                 | `struct<first_name:string, last_name:string>` |
| map       | A collection of key-value pairs          | `map<string,int>`                             |
| array     | A container of elements of the same type | `array<string>`                               |
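When a table is stored as delimited text, a `map` column ends up as a flat string inside each line. The sketch below shows how such a line could be decoded in Python, assuming tab-separated fields, `,` between map entries, and `:` between key and value (the delimiters used by the `user_info` table in the next section). The sample line and the functions `parse_map` and `parse_row` are hypothetical.

```Python
def parse_map(text: str, item_sep: str = ',', kv_sep: str = ':') -> dict:
    """Decode a map<string,string> column stored as 'k1:v1,k2:v2'."""
    if not text:
        return {}
    return dict(pair.split(kv_sep, 1) for pair in text.split(item_sep))


def parse_row(line: str, field_sep: str = '\t') -> dict:
    """Split one text line into named fields; the last field is a map."""
    user_id, user_name, extra = line.rstrip('\n').split(field_sep)
    return {'user_id': user_id, 'user_name': user_name, 'extra': parse_map(extra)}


# Hypothetical sample line, not taken from the real data file
row = parse_row('10001\tAlice\tsystemtype:android,education:master\n')
print(row)
```

Hive performs this decoding itself when it reads the file; the sketch is only meant to show what the delimiter clauses of `row format delimited` refer to.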

|

||||

|

||||

### Creating and Using Tables

1. Create a managed (internal) table.

```SQL
create table if not exists user_info
(
    user_id string,
    user_name string,
    sex string,
    age int,
    city string,
    firstactivetime string,
    level int,
    extra1 string,
    extra2 map<string,string>
)
row format delimited fields terminated by '\t'
collection items terminated by ','
map keys terminated by ':'
lines terminated by '\n'
stored as textfile;
```

2. Load data.

```SQL
load data local inpath '/home/ubuntu/data/user_info/user_info.txt' overwrite into table user_info;
```

or

```SQL
load data inpath '/data/user_info/user_info.txt' overwrite into table user_info;
```

3. Create a partitioned table.

```SQL
create table if not exists user_trade
(
    user_name string,
    piece int,
    price double,
    pay_amount double,
    goods_category string,
    pay_time bigint
)
partitioned by (dt string)
row format delimited fields terminated by '\t';
```

4. Enable dynamic partitioning.

```SQL
set hive.exec.dynamic.partition=true;
set hive.exec.dynamic.partition.mode=nonstrict;
set hive.exec.max.dynamic.partitions=10000;
set hive.exec.max.dynamic.partitions.pernode=10000;
```

5. Copy the data (shell command).

```Shell
hdfs dfs -put /home/ubuntu/data/user_trade/* /user/hive/warehouse/demo.db/user_trade
```

6. Repair the partitioned table.

```SQL
msck repair table user_trade;
```
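Steps 5 and 6 rely on Hive's convention of storing each partition in a subdirectory named `column=value` under the table directory; `msck repair table` registers partition directories that exist on HDFS but are not yet in the metastore. The sketch below shows, in plain Python, how a partition value can be read off such a path. The file names are hypothetical and `partition_spec` is an illustrative helper, not part of Hive.

```Python
from pathlib import PurePosixPath


def partition_spec(file_path: str, table_dir: str) -> dict:
    """Read key=value directory names between the table directory and the file."""
    relative = PurePosixPath(file_path).relative_to(table_dir)
    spec = {}
    for part in relative.parts[:-1]:       # skip the file name itself
        key, _, value = part.partition('=')
        spec[key] = value
    return spec


table_dir = '/user/hive/warehouse/demo.db/user_trade'
# Hypothetical file names; only the dt=... directory convention matters
paths = [
    f'{table_dir}/dt=2019-03-24/part-00000',
    f'{table_dir}/dt=2019-03-25/part-00000',
]
partitions = sorted({partition_spec(p, table_dir)['dt'] for p in paths})
print(partitions)
```

This is also why a filter such as `dt='2019-03-24'` is cheap on a partitioned table: only the matching directory has to be read.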

|

||||

|

||||

### Queries

#### Basic Syntax

```SQL
select user_name from user_info where city='beijing' and sex='female' limit 10;
select user_name, piece, pay_amount from user_trade where dt='2019-03-24' and goods_category='food';
```

#### group by

```SQL
-- For January to April 2019, how many users bought each category and what was the total amount
select goods_category, count(distinct user_name) as user_num, sum(pay_amount) as total from user_trade where dt between '2019-01-01' and '2019-04-30' group by goods_category;
```

```SQL
-- Users whose payments in April 2019 exceeded 50,000 yuan
select user_name, sum(pay_amount) as total from user_trade where dt between '2019-04-01' and '2019-04-30' group by user_name having sum(pay_amount) > 50000;
```

#### order by

```SQL
-- Top 5 users by payment amount in April 2019
select user_name, sum(pay_amount) as total from user_trade where dt between '2019-04-01' and '2019-04-30' group by user_name order by total desc limit 5;
```
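The queries above combine `where`, `group by`, `having`, `order by`, and `limit`. To see how the clauses interact on concrete data, the sketch below runs the two April queries against a tiny in-memory table. It uses Python's built-in `sqlite3` rather than Hive (an assumption for the sake of a runnable example), a reduced `user_trade` schema, and invented rows.

```Python
import sqlite3

conn = sqlite3.connect(':memory:')
conn.execute('create table user_trade (user_name text, pay_amount real, goods_category text, dt text)')
# Hypothetical rows for illustration only
conn.executemany('insert into user_trade values (?, ?, ?, ?)', [
    ('alice', 30000, 'food', '2019-04-02'),
    ('alice', 25000, 'book', '2019-04-15'),
    ('bob', 40000, 'food', '2019-04-20'),
    ('carol', 60000, 'food', '2019-03-30'),   # outside April, removed by where
])

april = "dt between '2019-04-01' and '2019-04-30'"
# where filters rows first, group by aggregates, having filters the groups
big_spenders = conn.execute(
    f'select user_name, sum(pay_amount) as total from user_trade where {april} '
    'group by user_name having sum(pay_amount) > 50000'
).fetchall()
# order by and limit are applied to the aggregated result
top = conn.execute(
    f'select user_name, sum(pay_amount) as total from user_trade where {april} '
    'group by user_name order by total desc limit 5'
).fetchall()
print(big_spenders)
print(top)
```

Note that carol's 60,000 payment never reaches the `having` clause because `where` discards it before grouping.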

|

||||

|

||||

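#### Common Functions

1. `from_unixtime`: converts a timestamp to a date
2. `unix_timestamp`: converts a date to a timestamp
3. `datediff`: computes the number of days between two dates
4. `if`: returns different values depending on a condition
5. `substr`: extracts a substring
6. `get_json_object`: extracts the `value` for a given `key` from a JSON string, for example `get_json_object(info, '$.first_name')`.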
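For readers more familiar with Python, the following rough equivalents may help clarify what some of these functions compute. They are approximations written for illustration, not Hive's implementations: UTC is assumed for the time conversions (Hive uses the session time zone), and this `get_json_object` only understands a top-level `$.key` path.

```Python
import json
from datetime import date, datetime, timezone


def from_unixtime(ts: int, fmt: str = '%Y-%m-%d %H:%M:%S') -> str:
    """Timestamp in seconds to a formatted string; UTC is assumed here."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).strftime(fmt)


def unix_timestamp(text: str, fmt: str = '%Y-%m-%d %H:%M:%S') -> int:
    """Formatted string to a timestamp in seconds; UTC is assumed here."""
    return int(datetime.strptime(text, fmt).replace(tzinfo=timezone.utc).timestamp())


def datediff(end: str, start: str) -> int:
    """Number of days from start to end, both given as 'yyyy-mm-dd'."""
    return (date.fromisoformat(end) - date.fromisoformat(start)).days


def get_json_object(text: str, path: str):
    """Look up a top-level '$.key' path in a JSON object string."""
    key = path[2:] if path.startswith('$.') else path
    return json.loads(text).get(key)


print(from_unixtime(1553385600))
print(unix_timestamp('2019-03-24 00:00:00'))
print(datediff('2019-04-30', '2019-04-01'))
print(get_json_object('{"first_name": "Alice"}', '$.first_name'))
```

In the `user_trade` table above, `pay_time` is a `bigint` timestamp, so `from_unixtime(pay_time)` is the typical way to turn it into a readable date in a query.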

|

||||

|

||||

|

|

@ -1,4 +1,4 @@

|

|||

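## Lesson 31: Overview of Web Data Collection

## Overview of Web Data Collection

A crawler, also often called a spider, is a bot (an automated script) that browses websites according to certain rules and collects the information it needs. Crawlers are widely used by internet search engines and for data collection. Anyone who has used a browser knows that, besides the text meant for people to read, web pages contain hyperlinks. A crawler follows those hyperlinks to keep discovering the addresses of other pages and keeps collecting data from them. The process resembles a bug or a spider wandering across a web, which is where the name comes from.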

|

||||

|

||||

|

|

|

|||

|

|

@ -1,4 +1,4 @@

|

|||

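## Lesson 32: Fetching Web Data with Python

## Fetching Web Data with Python

Web data collection is something Python is very good at. As mentioned in the previous lesson, programs that collect data from the web are usually called web crawlers or spiders. Even in the era of big data, data remains a weak spot for small and medium-sized businesses: some data has to be obtained through open or paid data APIs, while other industry and competitor data has to be gathered through web data collection. Whichever way you obtain web data, Python is a very good choice, because both its standard library and third-party libraries provide good support for web data collection.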

|

||||

|

||||

|

|

@ -1,4 +1,4 @@

|

|||

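## Lesson 33: Parsing HTML Pages with Python

## Parsing HTML Pages with Python

In earlier lessons we covered fetching web resources with the third-party library `requests` and introduced some front-end basics. Next we look at how to parse HTML and extract useful information from a page. We previously tried extracting page content with capture groups in regular expressions, but writing a correct regular expression is itself a headache. To solve this, we first need a deeper understanding of the structure of an HTML page and, on that basis, look at other ways of parsing pages.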

|

||||

|

||||

|

|

@ -1,4 +1,4 @@

|

|||

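## Lesson 34: Concurrent Programming in Python-1

## Concurrent Programming in Python-1

Today's computers have long been multi-CPU or multi-core machines, and the operating systems we use nearly all support multitasking. This lets us run several programs at once, and also lets us split one program into relatively independent subtasks that execute in parallel or concurrently, which shortens execution time and gives users a better experience. As a result, whatever the programming language, parallel or concurrent programming has become a standard skill for programmers. To explain how to achieve parallelism or concurrency in a Python program, we first need to understand two important concepts: processes and threads.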

|

||||

|

||||

|

|

@ -1,4 +1,4 @@

|

|||

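## Lesson 35: Concurrent Programming in Python-2

## Concurrent Programming in Python-2

As we said in the previous lesson, because of the GIL, multithreading in CPython cannot take advantage of multiple CPU cores. To get around the GIL, consider using multiple processes: each process has its own GIL, so a multi-process program is not limited by it. So how do we create and use multiple processes in a Python program?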

|

||||

|

||||

|

|

@ -1,4 +1,4 @@

|

|||

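## Lesson 36: Concurrent Programming in Python-3

## Concurrent Programming in Python-3

A crawler is a typical I/O-bound task, which means the program frequently blocks on I/O operations. For example, when we used `requests` to fetch page source or binary content, the program had to wait for the site's response after sending each request before it could continue. If the target site is slow or the network is poor, that wait can be long, and during it the whole program is blocked doing nothing. From earlier lessons we know that multithreading can speed up a crawler: in essence, when one thread blocks, other threads can keep running, so the program as a whole does not waste large amounts of time blocking and waiting.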

|

||||

|

||||

|

|

@ -1,4 +1,4 @@

|

|||

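## Lesson 37: Concurrent Programming in Crawlers

## Concurrent Programming in Crawlers

In previous lessons we introduced multithreading, multiprocessing, and asynchronous programming in Python. With these three techniques we can write concurrent or parallel programs, which both speeds up execution and improves the user experience. A crawler is a typical I/O-bound task, and for I/O-bound tasks both multithreading and asynchronous I/O are good choices: when one part of the program blocks on I/O, other parts can keep running, so we do not waste a lot of time waiting. Below, using the example of crawling images from the "[360图片](https://image.so.com/)" site and saving them locally, we show how single-threaded, multithreaded, and asynchronous I/O crawlers differ and briefly compare their efficiency.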

|

||||

|

||||

|

|

@ -0,0 +1,281 @@

|

|||

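## Scraping Dynamic Page Content with Selenium

According to one global web accessibility audit report, roughly three quarters of websites generate some or all of their content dynamically with JavaScript. This means the content cannot be found in the HTML when you choose "View page source" in the browser, so the scraping approach we used earlier no longer works. There are basically two solutions. One is to find the data API that supplies the dynamic content, an approach that also works for scraping data from mobile apps. The other is to run a browser through the automated testing tool Selenium and read the dynamic content after it has been rendered. For the first approach, we can use the browser's developer tools or a more specialized packet-capture tool (such as Charles, Fiddler, or Wireshark) to find the data API; the rest is the same as fetching data from the "360图片" site in the previous chapter, so we will not repeat it here. This chapter focuses on using Selenium to obtain a site's dynamic content.

### Introduction to Selenium

Selenium is an automated testing tool that can drive a browser to perform specific actions, which in turn helps crawler developers obtain a page's dynamic content. Put simply, anything we can see in the browser window can be obtained with Selenium, so it is an important option for sites that render content dynamically with JavaScript. Below we again use Chrome as the example. You need to install Chrome and download its driver first. The Chrome driver can be downloaded from the [ChromeDriver site](https://chromedriver.chromium.org/downloads); the driver version must match the browser version, and if there is no exact match, choose the closest version number.

<img src="https://gitee.com/jackfrued/mypic/raw/master/20220310134558.png" style="zoom: 35%">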

|

||||

|

||||

### Using Selenium

First install Selenium with `pip`, as shown below.

```Shell
pip install selenium
```

#### Loading a Page

Next, the code below drives Chrome to open Baidu.

```Python
from selenium import webdriver

# Create a Chrome browser object
browser = webdriver.Chrome()
# Load the specified page
browser.get('https://www.baidu.com/')
```

If you would rather not use Chrome, you can modify the code above to control another browser by creating the corresponding browser object (Firefox, Safari, and so on). If running the program produces the error shown below, the Chrome driver has not been added to the PATH environment variable and its location has not been specified in the program either.

```Shell
selenium.common.exceptions.WebDriverException: Message: 'chromedriver' executable needs to be in PATH. Please see https://sites.google.com/a/chromium.org/chromedriver/home
```

There are three ways to solve this problem:

1. Put the downloaded ChromeDriver in a directory that is already on PATH. Placing it next to the Python interpreter is recommended, because the interpreter's path was added to PATH when Python was installed.

2. Put ChromeDriver in the `bin` folder of the project's virtual environment (`Scripts` on Windows), so that it sits alongside the virtual environment's Python interpreter and is sure to be found.

3. Modify the code above: when creating the Chrome object, pass a `Service` object through the `service` parameter, and specify the location of ChromeDriver through the `executable_path` parameter of `Service`, as shown below:

```Python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

browser = webdriver.Chrome(service=Service(executable_path='venv/bin/chromedriver'))
browser.get('https://www.baidu.com/')
```

|

||||

|

||||

#### Finding Elements and Simulating User Actions

Next, we can simulate a user typing a search keyword into the text box on the Baidu home page and clicking the "百度一下" button. Once the page has loaded, page elements can be obtained with the `find_element` and `find_elements` methods of the `Chrome` object. Selenium supports several ways of locating elements, including CSS selectors, XPath, element name (tag name), element ID, and class name. `find_element` returns a single page element (a `WebElement` object), while `find_elements` returns a list of page elements. With a `WebElement` in hand, `send_keys` simulates user input and `click` simulates a mouse click, as shown below.

```Python
from selenium import webdriver
from selenium.webdriver.common.by import By

browser = webdriver.Chrome()
browser.get('https://www.baidu.com/')
# Locate an element by its ID
kw_input = browser.find_element(By.ID, 'kw')
# Simulate user input
kw_input.send_keys('Python')
# Locate an element with a CSS selector
su_button = browser.find_element(By.CSS_SELECTOR, '#su')
# Simulate a user click
su_button.click()
```

To perform a sequence of actions, such as simulating drag and drop, you can create an `ActionChains` object; interested readers can explore this on their own.

|

||||

|

||||

#### Implicit and Explicit Waits

There is one more detail to be aware of: elements on a page may be generated dynamically, so when we call `find_element` or `find_elements` they may not have been rendered yet, which raises `NoSuchElementException`. To deal with this we can use an implicit wait, setting a wait time that gives the browser a chance to finish rendering the elements. We can also use an explicit wait: create a `WebDriverWait` object with a timeout and a condition, and while the condition is not met, wait before attempting later operations. The code is shown below.

```Python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions
from selenium.webdriver.support.wait import WebDriverWait

browser = webdriver.Chrome()
# Set the browser window size
browser.set_window_size(1200, 800)
browser.get('https://www.baidu.com/')
# Set the implicit wait time to 10 seconds
browser.implicitly_wait(10)
kw_input = browser.find_element(By.ID, 'kw')
kw_input.send_keys('Python')
su_button = browser.find_element(By.CSS_SELECTOR, '#su')
su_button.click()
# Create an explicit wait object
wait_obj = WebDriverWait(browser, 10)
# Set the wait condition (wait for the search results div to appear)
wait_obj.until(
    expected_conditions.presence_of_element_located(
        (By.CSS_SELECTOR, '#content_left')
    )
)
# Take a screenshot
browser.get_screenshot_as_file('python_result.png')
```

The condition `presence_of_element_located` used above waits for the specified element to appear. The table below lists common wait conditions and their meanings.

| Condition                                | Meaning                                                  |
| ---------------------------------------- | -------------------------------------------------------- |
| `title_is / title_contains`              | The title is / contains the given text                   |
| `visibility_of`                          | The element is visible                                   |
| `presence_of_element_located`            | The located element has been loaded                      |
| `visibility_of_element_located`          | The located element becomes visible                      |
| `invisibility_of_element_located`        | The located element becomes invisible                    |
| `presence_of_all_elements_located`       | All located elements have been loaded                    |
| `text_to_be_present_in_element`          | The element contains the given text                      |
| `text_to_be_present_in_element_value`    | The element's `value` attribute contains the given text  |
| `frame_to_be_available_and_switch_to_it` | The inner frame is loaded and switched to                |
| `element_to_be_clickable`                | The element is clickable                                 |
| `element_to_be_selected`                 | The element is selected                                  |
| `element_located_to_be_selected`         | The located element is selected                          |
| `alert_is_present`                       | An alert dialog is present                               |
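The idea behind an explicit wait is simply "poll a condition until it holds or a timeout expires". The sketch below shows that idea in plain Python so it can be run without a browser. It is a simplified illustration and not Selenium's actual implementation; `wait_until`, `WaitTimeout`, and `element_present` are hypothetical names.

```Python
import time


class WaitTimeout(Exception):
    """Raised when the condition never becomes truthy in time."""


def wait_until(condition, timeout: float = 10.0, poll: float = 0.5):
    """Call condition() repeatedly until it returns a truthy value or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise WaitTimeout(f'condition not met within {timeout} seconds')
        time.sleep(poll)


# Stand-in for a page whose element only appears on the third check
attempts = {'count': 0}


def element_present():
    attempts['count'] += 1
    return 'element' if attempts['count'] >= 3 else None


found = wait_until(element_present, timeout=2, poll=0.01)
print(found, attempts['count'])
```

With Selenium, the condition would be one of the `expected_conditions` from the table above, and the value returned by the wait would typically be the located element.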

|

||||

|

||||

#### Executing JavaScript

For pages that use waterfall (infinite scroll) loading, you can load more content in the browser window by running JavaScript through the browser object's `execute_script` method. Some advanced scraping tasks are also likely to need this kind of operation. If your crawler needs JavaScript, it is worth learning the basics first, especially BOM and DOM operations. Adding the code below before the screenshot in the previous example scrolls the page to the bottom using JavaScript.

```Python
# Execute JavaScript
browser.execute_script('document.documentElement.scrollTop = document.documentElement.scrollHeight')
```

|

||||

|

||||

#### Getting Around Selenium Detection

Some websites set up anti-crawling measures specifically against Selenium, because in a browser driven by Selenium the `webdriver` property shown below has the value `true` in the console. To bypass this check, execute JavaScript before the page loads to change the property to `undefined`.

<img src="https://gitee.com/jackfrued/mypic/raw/master/20220310154246.png" style="zoom:50%">

We can also hide the "Chrome is being controlled by automated test software" notice in the browser window. The full code is shown below.

```Python
from selenium import webdriver

# Create a Chrome options object
options = webdriver.ChromeOptions()
# Add experimental options
options.add_experimental_option('excludeSwitches', ['enable-automation'])
options.add_experimental_option('useAutomationExtension', False)
# Create a Chrome browser object with the options
browser = webdriver.Chrome(options=options)
# Run a Chrome DevTools Protocol command (execute the given JavaScript when a page loads)
browser.execute_cdp_cmd(
    'Page.addScriptToEvaluateOnNewDocument',
    {'source': 'Object.defineProperty(navigator, "webdriver", {get: () => undefined})'}
)
browser.set_window_size(1200, 800)
browser.get('https://www.baidu.com/')
```

|

||||

|

||||

#### Headless Browser

Often we do not need to see the browser window while scraping; as long as Chrome and the matching driver are available, the crawler can run. If you do not want the browser window to appear, you can switch to headless mode as shown below.

```Python
from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument('--headless')
browser = webdriver.Chrome(options=options)
```

|

||||

|

||||

### API Reference

There is much more to Selenium than we can cover here. Below are some commonly used properties and methods of the browser object and the `WebElement` object. For details, see the [Chinese translation of the official documentation](https://selenium-python-zh.readthedocs.io/en/latest/index.html) for Selenium.

#### Browser Object

Table 1. Common properties

| Property                | Description                                           |
| ----------------------- | ----------------------------------------------------- |
| `current_url`           | URL of the current page                               |
| `current_window_handle` | Handle (reference) of the current window              |
| `name`                  | Name of the browser                                   |
| `orientation`           | Orientation of the device (landscape or portrait)     |
| `page_source`           | Source of the current page, including dynamic content |
| `title`                 | Title of the current page                             |
| `window_handles`        | Handles of all windows opened by the browser          |

Table 2. Common methods

| Method                                 | Description                                                     |
| -------------------------------------- | --------------------------------------------------------------- |
| `back` / `forward`                     | Go back / forward in the browsing history                       |
| `close` / `quit`                       | Close the current browser window / quit the browser instance    |
| `get`                                  | Load the page at the given URL                                  |
| `maximize_window`                      | Maximize the browser window                                     |
| `refresh`                              | Refresh the current page                                        |
| `set_page_load_timeout`                | Set the page load timeout                                       |
| `set_script_timeout`                   | Set the JavaScript execution timeout                            |
| `implicitly_wait`                      | Set how long to wait for an element to be found or a command to complete |
| `get_cookie` / `get_cookies`           | Get the given cookie / get all cookies                          |
| `add_cookie`                           | Add a cookie                                                    |
| `delete_cookie` / `delete_all_cookies` | Delete the given cookie / delete all cookies                    |
| `find_element` / `find_elements`       | Find a single element / find a list of elements                 |

#### WebElement Object

Table 1. Common WebElement properties

| Property   | Description                 |
| ---------- | --------------------------- |
| `location` | Position of the element     |
| `size`     | Size of the element         |
| `text`     | Text content of the element |
| `id`       | ID of the element           |
| `tag_name` | Tag name of the element     |

Table 2. Common methods

| Method                           | Description                                                      |
| -------------------------------- | ---------------------------------------------------------------- |
| `clear`                          | Clear the content of a text box or text area                     |
| `click`                          | Click the element                                                |
| `get_attribute`                  | Get the value of an attribute of the element                     |
| `is_displayed`                   | Check whether the element is visible to the user                 |
| `is_enabled`                     | Check whether the element is enabled                             |
| `is_selected`                    | Check whether the element (radio button or checkbox) is selected |
| `send_keys`                      | Simulate typing text                                             |
| `submit`                         | Submit the form                                                  |
| `value_of_css_property`          | Get the value of the given CSS property                          |
| `find_element` / `find_elements` | Get a single child element / get a list of child elements        |
| `screenshot`                     | Take a snapshot of the element                                   |

|

||||

|

||||

### A Simple Example

The example below shows how to use Selenium to search for and download images from the "360图片" site.

```Python
import os
import time
from concurrent.futures import ThreadPoolExecutor

import requests
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys

DOWNLOAD_PATH = 'images/'


def download_picture(picture_url: str):
    """
    Download and save a picture
    :param picture_url: URL of the picture
    """
    filename = picture_url[picture_url.rfind('/') + 1:]
    resp = requests.get(picture_url)
    with open(os.path.join(DOWNLOAD_PATH, filename), 'wb') as file:
        file.write(resp.content)


if not os.path.exists(DOWNLOAD_PATH):
    os.makedirs(DOWNLOAD_PATH)
browser = webdriver.Chrome()
browser.get('https://image.so.com/z?ch=beauty')
browser.implicitly_wait(10)
kw_input = browser.find_element(By.CSS_SELECTOR, 'input[name=q]')
kw_input.send_keys('苍老师')
kw_input.send_keys(Keys.ENTER)
# Scroll to the bottom ten times so that more images are loaded
for _ in range(10):
    browser.execute_script(
        'document.documentElement.scrollTop = document.documentElement.scrollHeight'
    )
    time.sleep(1)
imgs = browser.find_elements(By.CSS_SELECTOR, 'div.waterfall img')
# Download the images concurrently with a thread pool
with ThreadPoolExecutor(max_workers=32) as pool:
    for img in imgs:
        pic_url = img.get_attribute('src')
        pool.submit(download_picture, pic_url)
```

Run the code above and check that the images found for the search keyword have been downloaded to the specified directory.
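The example derives the file name by slicing everything after the last `/` in the URL. That works when the URL ends with the file name, but it would keep any query string or fragment. As a possible refinement (an assumption on my part, not something the example requires), the file name can be taken from the parsed URL path instead; `filename_from_url` and the sample URL below are hypothetical.

```Python
import posixpath
from urllib.parse import unquote, urlsplit


def filename_from_url(url: str, default: str = 'unnamed') -> str:
    """Take the last path segment, ignoring any query string or fragment."""
    path = unquote(urlsplit(url).path)
    return posixpath.basename(path) or default


name = filename_from_url('https://example.com/pics/t01abc.jpg?size=large#top')
print(name)
```

The `default` value covers URLs whose path ends with `/`, where there is no file name to extract.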

|

||||

|

|

@ -0,0 +1,250 @@

|

|||

## 爬虫框架Scrapy简介

|

||||

|

||||

当你写了很多个爬虫程序之后,你会发现每次写爬虫程序时,都需要将页面获取、页面解析、爬虫调度、异常处理、反爬应对这些代码从头至尾实现一遍,这里面有很多工作其实都是简单乏味的重复劳动。那么,有没有什么办法可以提升我们编写爬虫代码的效率呢?答案是肯定的,那就是利用爬虫框架,而在所有的爬虫框架中,Scrapy 应该是最流行、最强大的框架。

|

||||

|

||||

### Scrapy 概述

|

||||

|

||||

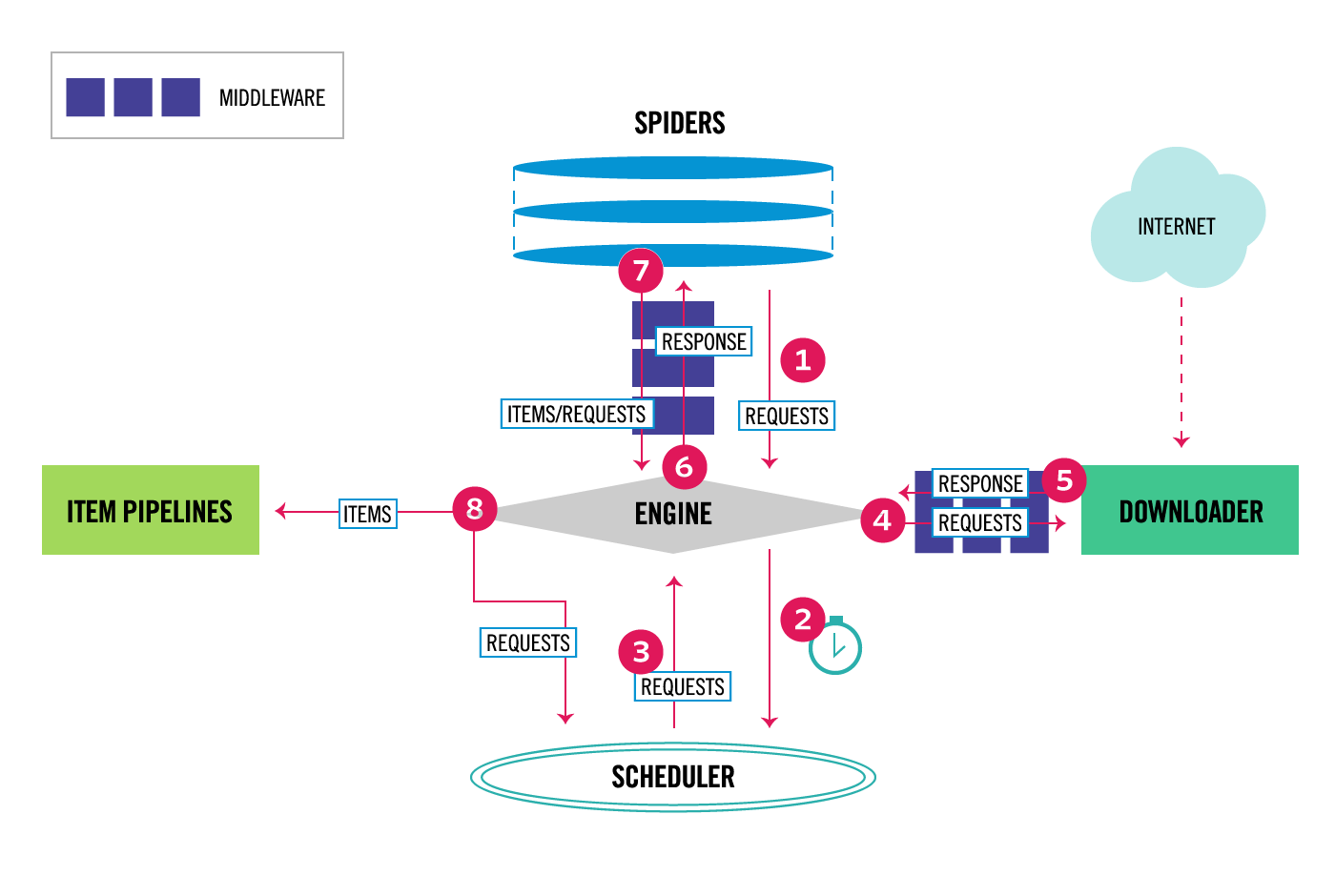

Scrapy 是基于 Python 的一个非常流行的网络爬虫框架,可以用来抓取 Web 站点并从页面中提取结构化的数据。下图展示了 Scrapy 的基本架构,其中包含了主要组件和系统的数据处理流程(图中带数字的红色箭头)。

|

||||

|

||||

|

||||

|

||||

#### Scrapy的组件

|

||||

|

||||

我们先来说说 Scrapy 中的组件。

|

||||

|

||||

1. Scrapy 引擎(Engine):用来控制整个系统的数据处理流程。

|

||||

2. 调度器(Scheduler):调度器从引擎接受请求并排序列入队列,并在引擎发出请求后返还给它们。

|

||||

3. 下载器(Downloader):下载器的主要职责是抓取网页并将网页内容返还给蜘蛛(Spiders)。

|

||||

4. 蜘蛛程序(Spiders):蜘蛛是用户自定义的用来解析网页并抓取特定URL的类,每个蜘蛛都能处理一个域名或一组域名,简单的说就是用来定义特定网站的抓取和解析规则的模块。

|

||||

5. 数据管道(Item Pipeline):管道的主要责任是负责处理有蜘蛛从网页中抽取的数据条目,它的主要任务是清理、验证和存储数据。当页面被蜘蛛解析后,将被发送到数据管道,并经过几个特定的次序处理数据。每个数据管道组件都是一个 Python 类,它们获取了数据条目并执行对数据条目进行处理的方法,同时还需要确定是否需要在数据管道中继续执行下一步或是直接丢弃掉不处理。数据管道通常执行的任务有:清理 HTML 数据、验证解析到的数据(检查条目是否包含必要的字段)、检查是不是重复数据(如果重复就丢弃)、将解析到的数据存储到数据库(关系型数据库或 NoSQL 数据库)中。

|

||||

6. 中间件(Middlewares):中间件是介于引擎和其他组件之间的一个钩子框架,主要是为了提供自定义的代码来拓展 Scrapy 的功能,包括下载器中间件和蜘蛛中间件。

|

||||

|

||||

#### 数据处理流程

|

||||

|

||||

Scrapy 的整个数据处理流程由引擎进行控制,通常的运转流程包括以下的步骤:

|

||||

|

||||

1. 引擎询问蜘蛛需要处理哪个网站,并让蜘蛛将第一个需要处理的 URL 交给它。

|

||||

|

||||

2. 引擎让调度器将需要处理的 URL 放在队列中。

|

||||

|

||||

3. 引擎从调度那获取接下来进行爬取的页面。

|

||||

|

||||

4. 调度将下一个爬取的 URL 返回给引擎,引擎将它通过下载中间件发送到下载器。

|

||||

|

||||

5. 当网页被下载器下载完成以后,响应内容通过下载中间件被发送到引擎;如果下载失败了,引擎会通知调度器记录这个 URL,待会再重新下载。

|

||||

|

||||

6. 引擎收到下载器的响应并将它通过蜘蛛中间件发送到蜘蛛进行处理。

|

||||

|

||||

7. 蜘蛛处理响应并返回爬取到的数据条目,此外还要将需要跟进的新的 URL 发送给引擎。

|

||||

|

||||

8. 引擎将抓取到的数据条目送入数据管道,把新的 URL 发送给调度器放入队列中。

|

||||

|

||||

上述操作中的第2步到第8步会一直重复直到调度器中没有需要请求的 URL,爬虫就停止工作。
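The loop described in the steps above can be sketched in a few lines of plain Python. This toy version is only meant to make the flow tangible; it leaves out middleware, retries, and concurrency, and it is not how Scrapy is implemented. The fake site `FAKE_WEB` and the functions `download`, `parse`, and `crawl` are invented for the illustration.

```Python
from collections import deque

# Hypothetical site: URL -> (item extracted from the page, links found on it)
FAKE_WEB = {
    '/page1': ('item-1', ['/page2', '/page3']),
    '/page2': ('item-2', ['/page3']),
    '/page3': ('item-3', []),
}


def download(url):
    """Stand-in for the downloader: return the 'response' for a URL."""
    return FAKE_WEB[url]


def parse(response):
    """Stand-in for the spider: yield one item, then the follow-up URLs."""
    item, links = response
    yield {'name': item}
    yield from links


def crawl(start_url):
    """Stand-in for the engine: move data between the other components."""
    scheduler, seen, stored = deque([start_url]), {start_url}, []
    while scheduler:                       # stop when no requests are queued
        url = scheduler.popleft()          # ask the scheduler for the next URL
        for result in parse(download(url)):
            if isinstance(result, dict):   # an item goes to the item pipeline
                stored.append(result)
            elif result not in seen:       # a new URL goes back to the scheduler
                seen.add(result)
                scheduler.append(result)
    return stored


items = crawl('/page1')
print(items)
```

The `seen` set plays the role of duplicate filtering: `/page3` is linked from two pages but is only crawled once.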

|

||||

|

||||

### Installing and Using Scrapy

Scrapy can be installed with `pip`, Python's package manager.

```Shell
pip install scrapy
```

Use the `scrapy` command on the command line to create a project named `demo`.

```Bash
scrapy startproject demo
```

The project's directory structure is shown below.

```Shell
demo
|____ demo
|________ spiders
|____________ __init__.py
|________ __init__.py
|________ items.py
|________ middlewares.py
|________ pipelines.py
|________ settings.py
|____ scrapy.cfg
```

Change into the `demo` directory and create a spider named `douban` with the command below.

```Bash
scrapy genspider douban movie.douban.com
```

|

||||

|

||||

#### A Simple Example

Next, we implement a crawler that collects the title, score, and memorable quote of each film in the Douban Movie Top 250.

1. Define fields in the `Item` class in `items.py`. These fields hold the data and make later operations easier.

```Python
import scrapy


class MovieItem(scrapy.Item):
    title = scrapy.Field()
    score = scrapy.Field()
    motto = scrapy.Field()
```

2. Modify the file named `douban.py` in the `spiders` folder. It is the core of the spider, and it is where we add the page-parsing code. Here we obtain the film information by parsing the `Response` object, as shown below.

```Python
import scrapy
from scrapy import Selector, Request
from scrapy.http import HtmlResponse

from demo.items import MovieItem


class DoubanSpider(scrapy.Spider):
    name = 'douban'
    allowed_domains = ['movie.douban.com']
    start_urls = ['https://movie.douban.com/top250?start=0&filter=']

    def parse(self, response: HtmlResponse):
        sel = Selector(response)
        movie_items = sel.css('#content > div > div.article > ol > li')
        for movie_sel in movie_items:
            item = MovieItem()
            item['title'] = movie_sel.css('.title::text').extract_first()
            item['score'] = movie_sel.css('.rating_num::text').extract_first()
            item['motto'] = movie_sel.css('.inq::text').extract_first()
            yield item
```

|

||||

As the code above shows, pages can be parsed with CSS selectors. If you prefer, you can also parse pages with XPath or regular expressions; the corresponding methods are `xpath` and `re`.

To generate requests for further crawling, we can `yield` `Request` objects. A `Request` object has two very important attributes: `url`, the address to request, and `callback`, the callback function to run once the response arrives. The code above can be modified slightly as follows.

```Python
import scrapy
from scrapy import Selector, Request
from scrapy.http import HtmlResponse

from demo.items import MovieItem


class DoubanSpider(scrapy.Spider):
    name = 'douban'
    allowed_domains = ['movie.douban.com']
    start_urls = ['https://movie.douban.com/top250?start=0&filter=']

    def parse(self, response: HtmlResponse):
        sel = Selector(response)
        movie_items = sel.css('#content > div > div.article > ol > li')
        for movie_sel in movie_items:
            item = MovieItem()
            item['title'] = movie_sel.css('.title::text').extract_first()
            item['score'] = movie_sel.css('.rating_num::text').extract_first()
            item['motto'] = movie_sel.css('.inq::text').extract_first()
            yield item

        # Follow the links in the paginator
        hrefs = sel.css('#content > div > div.article > div.paginator > a::attr("href")')
        for href in hrefs:
            full_url = response.urljoin(href.extract())
            yield Request(url=full_url)
```

|

||||

|

||||

At this point we can already run the crawler with the command below.

```Shell
scrapy crawl douban
```

The scraped data appears in the console. To save it to a file, specify a file name with the `-o` option; Scrapy can export scraped data in formats such as JSON, CSV, and XML.

```Shell
scrapy crawl douban -o result.json
```

|

||||

|

||||

You may have noticed that the JSON file produced by running the crawler contains `275` records. That is because the first page was crawled twice. To fix this, adjust the code above slightly: instead of extracting the URLs of new pages in the `parse` method, prepare the URLs of the pages to crawl in advance in the `start_requests` method. The adjusted code is shown below.

```Python
import scrapy
from scrapy import Selector, Request
from scrapy.http import HtmlResponse

from demo.items import MovieItem


class DoubanSpider(scrapy.Spider):
    name = 'douban'
    allowed_domains = ['movie.douban.com']

    def start_requests(self):
        for page in range(10):
            yield Request(url=f'https://movie.douban.com/top250?start={page * 25}')

    def parse(self, response: HtmlResponse):
        sel = Selector(response)
        movie_items = sel.css('#content > div > div.article > ol > li')
        for movie_sel in movie_items:
            item = MovieItem()
            item['title'] = movie_sel.css('.title::text').extract_first()
            item['score'] = movie_sel.css('.rating_num::text').extract_first()
            item['motto'] = movie_sel.css('.inq::text').extract_first()
            yield item
```

|

||||

|

||||

3. To persist the scraped data, process the `Item` objects produced by the spider in an item pipeline. For example, we can use `openpyxl`, covered earlier, to write the data to an Excel file, as shown below.

```Python
import openpyxl

from demo.items import MovieItem


class MovieItemPipeline:

    def __init__(self):
        self.wb = openpyxl.Workbook()
        self.sheet = self.wb.active
        self.sheet.title = 'Top250'
        self.sheet.append(('名称', '评分', '名言'))

    def process_item(self, item: MovieItem, spider):
        self.sheet.append((item['title'], item['score'], item['motto']))
        return item

    def close_spider(self, spider):
        self.wb.save('豆瓣电影数据.xlsx')
```

`process_item` and `close_spider` above are both callback methods (hook functions), meaning that the Scrapy framework calls them automatically. When the spider produces an `Item` object and hands it to the engine, the engine passes it to the item pipeline, and the `process_item` method of the pipeline we configured is executed. That makes it the place to receive the data and persist it. The other method, `close_spider`, is called automatically before the crawler finishes; in the code above it is where the Excel file is saved.

In summary, an item pipeline can help with the following:

- Cleaning HTML data and validating the scraped data.
- Dropping duplicate or unnecessary content.
- Persisting the scraped results.

|

||||

|

||||

4. 修改`settings.py`文件对项目进行配置,主要需要修改以下几个配置。

|

||||

|

||||

```Python
# User agent (how the crawler identifies itself to the site)
USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36'

# Number of concurrent requests
CONCURRENT_REQUESTS = 4

# Download delay (in seconds)
DOWNLOAD_DELAY = 3
# Randomize the download delay
RANDOMIZE_DOWNLOAD_DELAY = True

# Whether to obey robots.txt
ROBOTSTXT_OBEY = True

# Item pipelines
ITEM_PIPELINES = {
    'demo.pipelines.MovieItemPipeline': 300,
}
```

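> **Note**: The `ITEM_PIPELINES` setting in the configuration above is a dictionary, so multiple pipelines can be configured. The number after each pipeline is its execution priority: pipelines with smaller numbers run first.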
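
To make the priority rule concrete, the following sketch configures two pipelines and derives the order in which items would pass through them. `demo.pipelines.ValidationPipeline` is an assumed name used only for illustration; the sorting line is not something Scrapy requires, it just mirrors the "smaller number runs first" rule.

```Python
# Hypothetical settings: 'ValidationPipeline' is an assumed name.
ITEM_PIPELINES = {
    'demo.pipelines.MovieItemPipeline': 300,
    'demo.pipelines.ValidationPipeline': 100,
}

# Sorting by the priority number gives the order items flow through.
execution_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(execution_order)
```

With these values, validation would run before the Excel-writing pipeline, so invalid items never reach the file.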

@ -1,22 +0,0 @@

import asyncio


# @asyncio.coroutine / yield from were removed in Python 3.11;
# async def / await is the equivalent native syntax
async def countdown(name, num):
    while num > 0:
        print(f'Countdown[{name}]: {num}')
        await asyncio.sleep(1)
        num -= 1


async def main():
    # Run both countdowns concurrently and wait for them to finish
    await asyncio.gather(countdown("A", 10), countdown("B", 5))


if __name__ == '__main__':
    asyncio.run(main())

@ -1,29 +0,0 @@

import asyncio
import aiohttp


async def download(url):
    print('Fetch:', url)
    async with aiohttp.ClientSession() as session:
        async with session.get(url) as resp:
            print(url, '--->', resp.status)
            print(url, '--->', resp.cookies)
            print('\n\n', await resp.text())


async def main():
    urls = [
        'https://www.baidu.com',
        'http://www.sohu.com/',
        'http://www.sina.com.cn/',
        'https://www.taobao.com/',
        'https://www.jd.com/'
    ]
    # gather() wraps each coroutine in a task; passing bare coroutines
    # to asyncio.wait() is rejected on Python 3.11+
    await asyncio.gather(*(download(url) for url in urls))


if __name__ == '__main__':
    asyncio.run(main())

@ -1,27 +0,0 @@

from time import sleep


def countdown_gen(n, consumer):
    consumer.send(None)
    while n > 0:
        consumer.send(n)
        n -= 1
    consumer.send(None)


def countdown_con():
    while True:
        n = yield
        if n:
            print(f'Countdown {n}')
            sleep(1)
        else:
            print('Countdown Over!')


def main():
    countdown_gen(5, countdown_con())


if __name__ == '__main__':
    main()

@ -1,42 +0,0 @@

from time import sleep

from myutils import coroutine


@coroutine
def create_delivery_man(name, capacity=1):
    buffer = []
    while True:
        size = 0
        while size < capacity:
            pkg_name = yield
            if pkg_name:
                size += 1
                buffer.append(pkg_name)
                print('%s is accepting %s' % (name, pkg_name))
            else:
                break
        print('=====%s is delivering %d packages=====' % (name, len(buffer)))
        sleep(3)
        buffer.clear()


def create_package_center(consumer, max_packages):
    num = 0
    while num <= max_packages:
        print('The dispatch center is preparing to send package No. %d' % num)
        consumer.send('Package-%d' % num)
        num += 1
        if num % 10 == 0:
            sleep(5)
    consumer.send(None)


def main():
    print(create_delivery_man.__name__)
    dm = create_delivery_man('王大锤', 7)
    create_package_center(dm, 25)


if __name__ == '__main__':
    main()

@ -1,15 +0,0 @@

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class MovieItem(scrapy.Item):

    title = scrapy.Field()
    score = scrapy.Field()
    motto = scrapy.Field()

@ -1,103 +0,0 @@

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals


class DoubanSpiderMiddleware(object):
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.

        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.

        # Must return an iterable of Request, dict or Item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.

        # Should return either None or an iterable of Response, dict
        # or Item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn’t have a response associated.

        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)


class DoubanDownloaderMiddleware(object):
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.

        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        request.meta['proxy'] = 'http://144.52.232.155:80'

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.

        # Must either:
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.

        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

@ -1,20 +0,0 @@

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html


class DoubanPipeline(object):

    # def __init__(self, server, port):
    #     pass

    # @classmethod
    # def from_crawler(cls, crawler):
    #     return cls(crawler.settings['MONGO_SERVER'],
    #                crawler.settings['MONGO_PORT'])

    def process_item(self, item, spider):
        return item

@ -1,94 +0,0 @@

# -*- coding: utf-8 -*-

# Scrapy settings for douban project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'douban'

MONGO_SERVER = '120.77.222.217'
MONGO_PORT = 27017

SPIDER_MODULES = ['douban.spiders']
NEWSPIDER_MODULE = 'douban.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) ' \
             'Chrome/65.0.3325.181 Safari/537.36'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
CONCURRENT_REQUESTS = 2

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
DOWNLOAD_DELAY = 5
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'douban.middlewares.DoubanSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'douban.middlewares.DoubanDownloaderMiddleware': 543,
}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'douban.pipelines.DoubanPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

@ -1,4 +0,0 @@

# This package will contain the spiders of your Scrapy project
#
# Please refer to the documentation for information on how to create and manage
# your spiders.

@ -1,23 +0,0 @@

# -*- coding: utf-8 -*-
import scrapy

from douban.items import MovieItem


class MovieSpider(scrapy.Spider):
    name = 'movie'
    allowed_domains = ['movie.douban.com']
    start_urls = ['https://movie.douban.com/top250']

    def parse(self, response):
        li_list = response.xpath('//*[@id="content"]/div/div[1]/ol/li')
        for li in li_list:
            item = MovieItem()
            item['title'] = li.xpath('div/div[2]/div[1]/a/span[1]/text()').extract_first()
            item['score'] = li.xpath('div/div[2]/div[2]/div/span[2]/text()').extract_first()
            item['motto'] = li.xpath('div/div[2]/div[2]/p[2]/span/text()').extract_first()
            yield item
        # Raw string avoids the invalid '\?' escape warning
        href_list = response.css('a[href]::attr("href")').re(r'\?start=.*')
        for href in href_list:
            url = response.urljoin(href)
            yield scrapy.Request(url=url, callback=self.parse)

@ -1,11 +0,0 @@

# Automatically created by: scrapy startproject
#
# For more information about the [deploy] section see:
# https://scrapyd.readthedocs.io/en/latest/deploy.html

[settings]
default = douban.settings

[deploy]
#url = http://localhost:6800/
project = douban

@ -1,84 +0,0 @@

from urllib.error import URLError
from urllib.request import urlopen

import re
import pymysql
import ssl

from pymysql import Error


# Decode the page with the given charsets (not every site uses utf-8)
def decode_page(page_bytes, charsets=('utf-8',)):
    page_html = None
    for charset in charsets:
        try:
            page_html = page_bytes.decode(charset)
            break
        except UnicodeDecodeError:
            pass
            # logging.error('Decode:', error)
    return page_html


# Fetch the page's HTML (retries a given number of times via recursion)
def get_page_html(seed_url, *, retry_times=3, charsets=('utf-8',)):
    page_html = None
    try:
        page_html = decode_page(urlopen(seed_url).read(), charsets)
    except URLError:
        # logging.error('URL:', error)
        if retry_times > 0:
            return get_page_html(seed_url, retry_times=retry_times - 1,
                                 charsets=charsets)
    return page_html


# Extract the needed parts from the page (usually links; the pattern is given as a regular expression)
def get_matched_parts(page_html, pattern_str, pattern_ignore_case=re.I):
    pattern_regex = re.compile(pattern_str, pattern_ignore_case)
    return pattern_regex.findall(page_html) if page_html else []


# Run the crawler and persist the selected data
def start_crawl(seed_url, match_pattern, *, max_depth=-1):
    conn = pymysql.connect(host='localhost', port=3306,
                           database='crawler', user='root',
                           password='123456', charset='utf8')
    try:
        with conn.cursor() as cursor:
            url_list = [seed_url]
            visited_url_list = {seed_url: 0}
            while url_list:
                current_url = url_list.pop(0)
                depth = visited_url_list[current_url]
                if depth != max_depth:
                    page_html = get_page_html(current_url, charsets=('utf-8', 'gbk', 'gb2312'))
                    links_list = get_matched_parts(page_html, match_pattern)
                    param_list = []
                    for link in links_list:
                        if link not in visited_url_list:
                            visited_url_list[link] = depth + 1
                            page_html = get_page_html(link, charsets=('utf-8', 'gbk', 'gb2312'))
                            headings = get_matched_parts(page_html, r'<h1>(.*)<span')
                            if headings:
                                param_list.append((headings[0], link))
                    cursor.executemany('insert into tb_result values (default, %s, %s)',
                                       param_list)
                    conn.commit()
    except Error:
        pass
        # logging.error('SQL:', error)
    finally:
        conn.close()


def main():
    ssl._create_default_https_context = ssl._create_unverified_context
    start_crawl('http://sports.sohu.com/nba_a.shtml',
                r'<a[^>]+test=a\s[^>]*href=["\'](.*?)["\']',
                max_depth=2)


if __name__ == '__main__':
    main()

@ -1,71 +0,0 @@

from bs4 import BeautifulSoup

import re


def main():
    html = """
    <!DOCTYPE html>
    <html lang="en">
        <head>
            <meta charset="UTF-8">
            <title>Home</title>
        </head>
        <body>
            <h1>Hello, world!</h1>
            <p>This is a <em>magical</em> website!</p>
            <hr>
            <div>
                <h2>This is a sample program</h2>
                <p>Quiet Night Thought</p>
                <p class="foo">Moonlight before my bed</p>
                <p id="bar">Could it be frost on the ground?</p>
                <p class="foo">I raise my head to gaze at the bright moon</p>
                <div><a href="http://www.baidu.com"><p>I lower my head and think of home</p></a></div>
            </div>
            <a class="foo" href="http://www.qq.com">Tencent</a>
            <img src="./img/pretty-girl.png" alt="Pretty girl">
            <img src="./img/hellokitty.png" alt="Hello Kitty">
            <img src="/static/img/pretty-girl.png" alt="Pretty girl">
            <table>
                <tr>
                    <th>Name</th>
                    <th>Minutes played</th>
                    <th>Points</th>
                    <th>Rebounds</th>
                    <th>Assists</th>
                </tr>
            </table>
        </body>
    </html>
    """
    soup = BeautifulSoup(html, 'lxml')
    # JavaScript - document.title
    print(soup.title)
    # JavaScript - document.body.h1
    print(soup.body.h1)
    print(soup.p)
    print(soup.body.p.text)
    print(soup.body.p.contents)
    for p_child in soup.body.p.children:
        print(p_child)
    print(len([elem for elem in soup.body.children]))
    print(len([elem for elem in soup.body.descendants]))
    print(soup.find_all(re.compile(r'^h[1-6]')))
    # A plain string matches the tag name literally, so compile the pattern
    print(soup.body.find_all(re.compile(r'^h')))
    print(soup.body.div.find_all(re.compile(r'^h')))
    print(soup.find_all(re.compile(r'r$')))
    print(soup.find_all('img', {'src': re.compile(r'\./img/\w+\.png')}))
    print(soup.find_all(lambda x: len(x.attrs) == 2))
    print(soup.find_all(foo))
    print(soup.find_all('p', {'class': 'foo'}))
    for elem in soup.select('a[href]'):
        print(elem.attrs['href'])


def foo(elem):
    return len(elem.attrs) == 2


if __name__ == '__main__':
    main()

@ -1,27 +0,0 @@

from bs4 import BeautifulSoup

import requests

import re


def main():
    # Fetch the page with the get function of the third-party requests library
    resp = requests.get('http://sports.sohu.com/nba_a.shtml')
    # Decode the response bytes (some Sohu pages use GBK encoding)
    html = resp.content.decode('gbk')
    # Create a BeautifulSoup object to parse the page (comparable to the DOM in JavaScript)
    bs = BeautifulSoup(html, 'lxml')
    # Find elements with CSS selector syntax and process them in a loop
    # for elem in bs.find_all(lambda x: 'test' in x.attrs):
    for elem in bs.select('a[test]'):
        # Read the element's attribute value from attrs (a dict)
        link_url = elem.attrs['href']
        resp = requests.get(link_url)
        bs_sub = BeautifulSoup(resp.text, 'lxml')
        # Post-process the extracted data with a regular expression
        print(re.sub(r'[\r\n]', '', bs_sub.find('h1').text))


if __name__ == '__main__':
    main()

@ -1,31 +0,0 @@

from urllib.parse import urljoin

import re
import requests

from bs4 import BeautifulSoup


def main():
    headers = {'user-agent': 'Baiduspider'}
    proxies = {
        'http': 'http://122.114.31.177:808'
    }
    base_url = 'https://www.zhihu.com/'
    seed_url = urljoin(base_url, 'explore')
    resp = requests.get(seed_url,
                        headers=headers,
                        proxies=proxies)
    soup = BeautifulSoup(resp.text, 'lxml')
    href_regex = re.compile(r'^/question')
    link_set = set()
    for a_tag in soup.find_all('a', {'href': href_regex}):
        if 'href' in a_tag.attrs:
            href = a_tag.attrs['href']
            full_url = urljoin(base_url, href)
            link_set.add(full_url)
    print('Total %d question pages found.' % len(link_set))


if __name__ == '__main__':
    main()

@ -1,83 +0,0 @@

from urllib.error import URLError
from urllib.request import urlopen

import re
import redis
import ssl
import hashlib
import logging
import pickle
import zlib

# Redis offers two persistence options
# 1. RDB
# 2. AOF


# Decode the page with the given charsets (not every site uses utf-8)
def decode_page(page_bytes, charsets=('utf-8',)):
    page_html = None
    for charset in charsets:
        try:
            page_html = page_bytes.decode(charset)
            break
        except UnicodeDecodeError:
            pass
            # logging.error('[Decode]', err)
    return page_html


# Fetch the page's HTML (retries a given number of times via recursion)
def get_page_html(seed_url, *, retry_times=3, charsets=('utf-8',)):
    page_html = None
    try:
        if seed_url.startswith('http://') or \
                seed_url.startswith('https://'):
            page_html = decode_page(urlopen(seed_url).read(), charsets)
    except URLError as err:
        # logging needs a %s placeholder to format the extra argument
        logging.error('[URL] %s', err)